article Artificial Intelligence

The Abstractions, They Are A-Changing

We’re Beginning to Understand What’s Next

article Artificial Intelligence

AI’s Swiss Cheese

Takeaways from My Conversation with Matthew Prince of Cloudflare

article Artificial Intelligence

Context Engineering: Bringing Engineering Discipline to Prompts—Part 1

From “Prompt Engineering” to “Context Engineering”

podcast

Generative AI in the Real World: Jay Alammar on Building AI for the Enterprise

article

The Future of Product Management Is AI-Native

video

Should Product Managers Use AI to Build Prototypes?—Marily Nika Live with Tim O'Reilly

article

What Ants Teach Us About AI Alignment

article

Radar Trends to Watch: August 2025

article

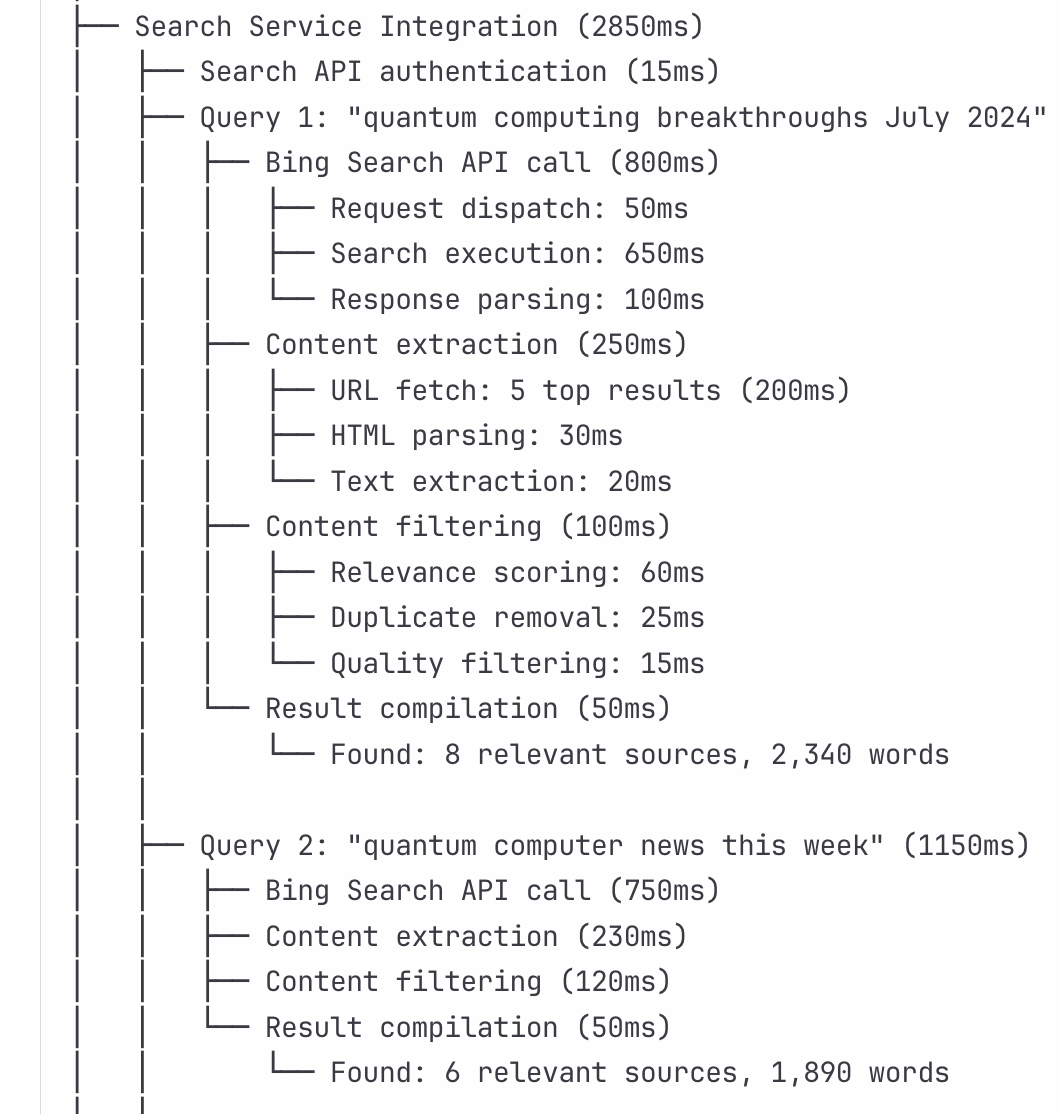

The Observability of Observability

article

Protocols and Power

article

Interfaces That Build Themselves

article

Hanoi Turned Upside Down

article

AI and Education

article

OpenAI’s Telemetry

podcast

Generative AI in the Real World: Phillip Carter on Where Generative AI Meets Observability

article

From REST to Reasoning: A Journey Through AI-First Architecture

article

How Human-Centered AI Actually Gets Built
